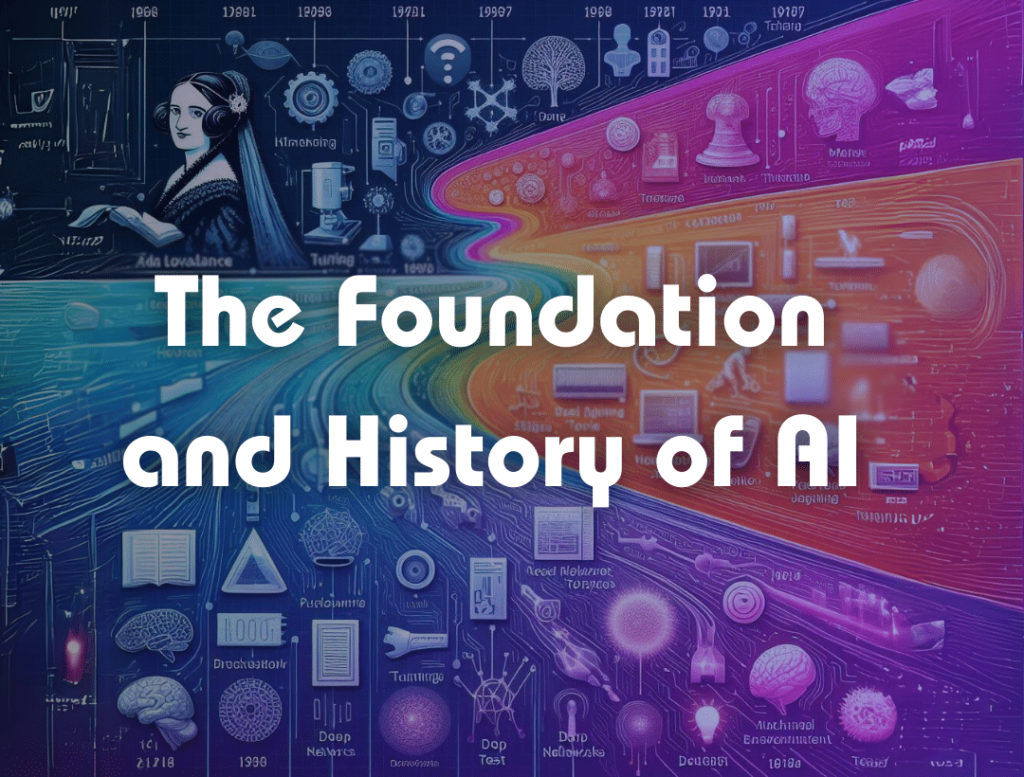

History of Artificial Intelligence – A Timeline: The dream of creating intelligent machines has captivated us for centuries. Let’s journey through the milestones that brought us to the modern AI era:

The Seeds of AI

Antiquity: Myths across cultures feature animated statues and artificial beings, revealing an early fascination with manufactured intelligence. The ancient Greek myths of Hephaestus’s automatons and the Jewish legend of the Golem are just a few examples. These narratives suggest that humans have long contemplated the concept of artificial life and intelligence.

Medieval Period: Thinkers such as Al-Jazari experiment with mechanical devices like automata, demonstrating an early understanding of the principles of automation and control. These inventions, though simple by today’s standards, laid the groundwork for later advancements in robotics and artificial intelligence.

1600s – 1800s: Philosophers and mathematicians such as Gottfried Wilhelm Leibniz and George Boole lay the foundations of symbolic logic and computation, providing theoretical tools for future AI. Leibniz’s development of binary arithmetic and Boole’s algebra of logic shaped the way we conceptualize computation and information processing.

The Birth of Computing

1822: Charles Babbage begins work on the Difference Engine, a mechanical calculator intended to compute mathematical tables automatically. Although it was never completed during Babbage’s lifetime, the Difference Engine and his later, more general Analytical Engine are considered precursors to modern computers and a significant milestone in the history of computing.

1843: Ada Lovelace, working with Babbage, publishes notes on his proposed Analytical Engine in which she envisions machines capable of going beyond mere number-crunching. Her insight that computers could manipulate symbols and perform a wide variety of tasks helped lay the foundation for computer science and, by extension, artificial intelligence.

1936: Alan Turing introduces the Turing Machine, a theoretical model of computation that includes the idea of a universal machine capable of simulating any other. Turing’s conceptual framework provided a way to define and analyze algorithms, shaping the trajectory of computational research and paving the way for artificial intelligence.

1943: Warren McCulloch and Walter Pitts propose a model for artificial neurons, inspired by the workings of the brain. Their work laid the foundation for neural network theory, which has become a cornerstone of modern machine learning and AI research.

A Field is Born

1950: Turing publishes “Computing Machinery and Intelligence,” proposing the Turing Test (which he called the “imitation game”) as a measure of machine intelligence. This seminal paper sparked widespread interest in whether machines could exhibit human-like intelligence and laid much of the intellectual groundwork for the field of artificial intelligence.

1955: John McCarthy coins the term “Artificial Intelligence” in the proposal for a summer workshop at Dartmouth. McCarthy, along with other pioneers such as Marvin Minsky and Herbert Simon, sought to create machines that could perform tasks traditionally requiring human intelligence, such as problem-solving and decision-making.

1956: The Dartmouth Summer Research Project on Artificial Intelligence is held, officially launching the AI field. Organized by McCarthy, Minsky, Nathaniel Rochester, and Claude Shannon, the conference brought together researchers from various disciplines to explore the possibilities of artificial intelligence, laying the groundwork for future advancements in the field.

Early Progress and Setbacks

1950s – 1960s: Early AI programs achieve limited success in games like checkers and in basic language translation; Arthur Samuel’s checkers program even improved by playing against itself. Much early research focused on symbolic, rule-based approaches that encoded human knowledge in specific domains. While these systems showed promise in narrow contexts, they often struggled to adapt to new situations or handle ambiguity.

1960s – 1970s: Growing optimism leads to overpromises about AI’s potential. Progress proves slower than anticipated, and critical assessments such as the 1973 Lighthill Report in the UK contribute to sharp funding cuts by the mid-1970s, initiating the first “AI winter.” During this period of disillusionment, many researchers turned their attention to other areas of computer science.

1970s – 1980s: Expert systems emerge, attempting to codify specialized knowledge as rules and heuristics applied to specific problem domains. Systems such as MYCIN, which recommended antibiotic treatments for bacterial infections, showed promise in areas like medical diagnosis and financial analysis, and expert systems became a commercial boom in the 1980s. Ultimately, however, they proved brittle and expensive to maintain because they could not adapt to new information or learn from experience.

The Rise of Machine Learning

1980s: Renewed interest in neural networks sparks progress. Research on artificial neural networks had persisted among a small group of researchers, and the popularization of the backpropagation training algorithm in 1986 renewed optimism about machine learning. Computational limitations remained, however, and when the expert-system market collapsed in the late 1980s, a second “AI winter” followed, lasting into the early 1990s.

1990s: Machine learning gains traction. The proliferation of digital data and advances in computing technology fuel rapid progress, and researchers develop more sophisticated algorithms capable of learning from large datasets, improving pattern recognition in areas such as speech recognition, image classification, and natural language processing.

1997: IBM’s Deep Blue defeats world chess champion Garry Kasparov, a watershed moment for AI. Although Deep Blue relied mainly on massive search and hand-tuned evaluation rather than machine learning, its victory showed that a machine could outplay the best human in a game long regarded as a hallmark of human intellect. The match captured the public’s imagination and renewed interest in artificial intelligence research.

The AI Explosion

2000s: The rise of the internet yields vast amounts of data, well suited to advanced learning algorithms. The proliferation of internet-connected devices and digital services led to an explosion of data in the early 21st century, enabling new applications such as online recommendation systems, targeted advertising, and personalized healthcare.

2010s: Deep learning revolutionizes areas like image recognition, speech recognition, natural language processing, and machine translation. A subfield of machine learning that uses many-layered neural networks loosely inspired by the brain, deep learning became the dominant paradigm in AI research during the 2010s, driven by advances in network architectures, training algorithms, large datasets, and GPU computing.

2016: AlphaGo, developed by Google’s DeepMind, defeats Lee Sedol, one of the world’s top professional players, at the ancient and complex strategy game of Go, a feat many experts had expected to be at least a decade away. AlphaGo’s 4–1 victory showcased the remarkable progress of AI systems on highly complex problems and underscored the potential of machine learning techniques to surpass human performance in challenging domains.

AI Today and Beyond

2020s: AI is now woven into our daily lives through personalized recommendations, fraud detection, virtual assistants, driver-assistance and self-driving systems, medical diagnostics, and more. These AI-powered applications have the potential to transform industries and improve the quality of life for people around the world.

The Future: Profound questions remain about AI’s role in society, alongside the potential for further revolutionary breakthroughs. As artificial intelligence continues to advance, questions about ethics, accountability, and the societal impact of AI technologies become increasingly urgent. Researchers and policymakers must grapple with complex issues related to fairness, transparency, and privacy to ensure that AI benefits society as a whole.

The Ongoing Quest

The history of AI is a story of perseverance, punctuated by successes and setbacks. Driven by a desire to replicate, and perhaps even surpass, our own intelligence, we continue to push the boundaries of artificial minds. Where this journey will take us remains one of the most compelling questions of our time. As we navigate the complexities of creating intelligent machines, it is essential to remain mindful of the ethical and societal implications of our technological advancements. Only by approaching AI development with humility, responsibility, and foresight can we unlock its full potential for the benefit of humanity.

Thank you for joining us on this captivating journey through the History of Artificial Intelligence! Stay tuned for more fascinating insights and engaging content every day at aismartclass.